TrueNAS Scale 2: Electic Eel-aloo ft Traefik

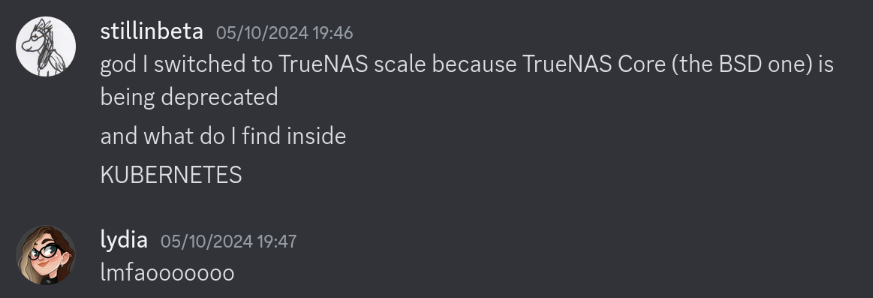

Right as I got TrueNAS Kubernetes Edition working, it became clear that it wasn't going to last long. All future development for Apps was in a docker-compose direction

Purported advantages include lower overhead and simpler configuration. For me, the advantage was no longer having to deal with Kubernetes in my free time. Docker isn't really that much better, but we have to start somewhere.

The Router

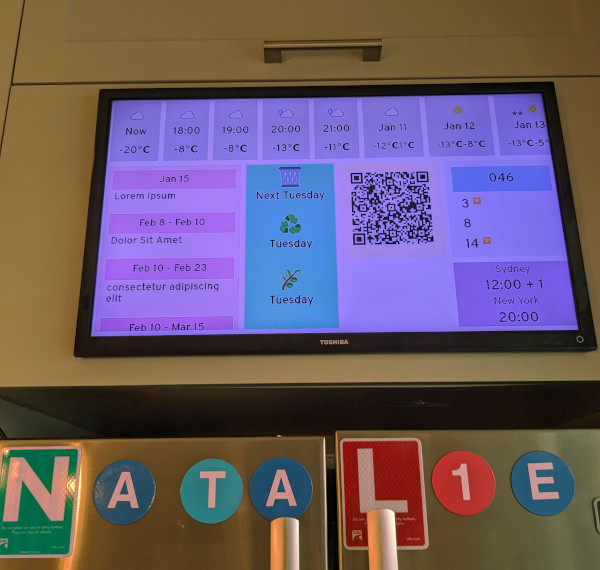

Out of the box, the way you access TrueNAS applications by port number.

I just need to remember that say, Plex is on :32400,

my household display is on :9045, PhotoPrism is on :20800 etc

That's annoying!

What I want is to go to plex.montero.<domain>,

or den-tv.montero.<domain>. There's a couple steps we need for this.

First, we need access to port 80.

By default, the TrueNAS Web UI is on :80 and :443,

but that can be changed.

Next, we need *.montero.<domain> to resolve to montero.

I have a Ubiquiti router, so I just added a A Record for *.montero.<domain>.

Finally, we need a reverse proxy to actually route our requests.

Nginx Proxy Manager

This was what I initially chose. It's in the TrueNAS repos, so I figured it must be well supported. And it worked just fine!

But I didn't really like it. For one, it had an account system I didn't need. And for two, it was all manually configured via a Web UI. That's its defining feature! But I don't need it.

Traefik

I mostly associate Traefik with Kubernetes, but it's got another neat trick up its sleeve: A Docker provider.

The way this works is pretty slick: It'll look at all the running containers, grab the first port they expose, and set up a router to target it.

We need to configure a lot, so we'll use docker-compose YAML.

To make this work, we first bind-mount the Docker socket:

services: traefik: image: traefik:v3 volumes: - type: bind source: '/var/run/docker.sock' target: '/var/run/docker.sock'

Then we just set some env variables:

services: traefik: <snip> environment: # set up the dashboard TRAEFIK_API_INSECURE: 'true' # regular server on port 80 TRAEFIK_ENTRYPOINTS_HTTP_ADDRESS: ':80' # admin UI on port 15000 TRAEFIK_ENTRYPOINTS_TRAEFIK_ADDRESS: ':15000' # enable the docker provider TRAEFIK_PROVIDERS_DOCKER: 'true'

And voilà, we can magically access any of the containers running!

root@montero[~]# curl -IH 'Host: jellyfin-ix-jellyfin' http://localhost/web/ HTTP/1.1 200 OK Accept-Ranges: bytes Content-Length: 9723 Content-Type: text/html Date: Mon, 02 Dec 2024 05:57:22 GMT Etag: "1db3a353dad17fb" Last-Modified: Tue, 19 Nov 2024 03:43:48 GMT Server: Kestrel X-Response-Time-Ms: 116.9131

Nicer Hostnames

Now, jellyfin-ix-jellyfin isn't especially pleasant.

We could use docker labels to specify a hostname,

but a) not every TrueNAS app lets you add labels and

b) we want to do less manual work!

Instead, we can set up a defaultRule.

The docs say:

It must be a valid Go template, and can use sprig template functions. The container name can be accessed with the

ContainerNameidentifier. The service name can be accessed with theNameidentifier.

With a little regex magic, we can make our labels a little nicer:

services: traefik: environment: TRAEFIK_PROVIDERS_DOCKER_DEFAULTRULE: >- {{ regexReplaceAll "([a-z-]+)-ix.*" .Name "Host(`$1.montero.house.local`) || Host(`$1.montero`)" }}

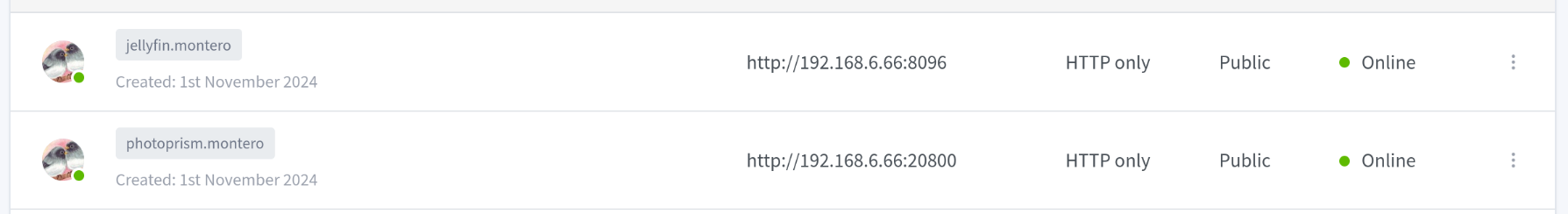

And here we go! Accessible exactly as we want!

$ curl -I http://jellyfin.montero/web/ HTTP/1.1 200 OK Accept-Ranges: bytes Content-Length: 9723 Content-Type: text/html Date: Mon, 02 Dec 2024 06:11:05 GMT Etag: "1db3a353dad17fb" Last-Modified: Tue, 19 Nov 2024 03:43:48 GMT Server: Kestrel X-Response-Time-Ms: 0.1094

Configuration

Now, this doesn't always work perfectly. Plex, for example, exposes multiple ports, so Traefik needs a little help to find the right one. We do this with a Docker Label:

traefik.http.services.plex.loadbalancer.server.port=32000

For docker-compose services, we can just drop in labels files directly, especially if they don't match the ix scheme:

labels: - traefik.http.services.transmission.loadbalancer.server.port=9001 - >- traefik.http.routers.transmission.rule=Host(`transmission.montero.house.local`) || Host(`transmission.montero`)

TrueNAS itself

Ideally, we could access the TrueNAS web interface through our proxy as well.

truenas.montero ought to work, at least if we're not futzing with the router.

But TrueNAS doesn't run in Docker, so our regular discovery mechanism doesn't work.

The File provider

The solution I settled on is… inelegant, but servicable.

Traefik has two kinds of configuration: Static configuration and dynamic configuration. We're using env variables to control static configuration: what port to serve on, how to name services, other stuff that won't change.

But "services" (Traefik backend servers) and "routers" (Frontend rule sets) are dynamic. So even though ours won't change, we need to use a dynamic configuration provider.

The only manual ones available File and HTTP. Thus, to add our special case entry, we need to create a file.

Here's our basic declaration:

http: services: TrueNAS: loadbalancer: servers: - url: http://192.168.6.66:8080 routers: TrueNAS: entrypoints: - http service: TrueNAS rule: "Host(`truenas.montero`) || Host(`truenas.montero.house.local`)"

We'll make use of Docker Compose configs.

services: traefik: environment: TRAEFIK_PROVIDERS_FILE_FILENAME: '/tmp/traefik-truenas.yaml' configs: - source: truenas_config target: '/tmp/traefik-truenas.yaml' configs: truenas_config: content: | http: services: TrueNAS: loadbalancer: servers: - url: http://192.168.6.66:8080 routers: TrueNAS: entrypoints: - http service: TrueNAS rule: >- Host(`truenas.montero`) || Host(`truenas.montero.house.local`)

That's right. just stick it in the YAML. This keeps everything we need in one place.

Now we can access the UI:

$ curl -I http://truenas.montero/ui/ HTTP/1.1 200 OK <snip>

Conclusion

I'm very happy with this set up. Any new service I turn up on my NAS will automatically become routable. No (further) configuration needed!

Appendix: docker-compose.yaml

services: traefik: image: traefik:v3 environment: TRAEFIK_API_INSECURE: 'true' TRAEFIK_ENTRYPOINTS_HTTP_ADDRESS: ':80' TRAEFIK_ENTRYPOINTS_TRAEFIK_ADDRESS: ':15000' TRAEFIK_PROVIDERS_DOCKER: 'true' TRAEFIK_PROVIDERS_DOCKER_DEFAULTRULE: >- {{ regexReplaceAll "([a-z-]+)-ix.*" .Name "Host(`$1.montero.house.local`) || Host(`$1.montero`)"}} TRAEFIK_PROVIDERS_FILE_FILENAME: '/tmp/traefik-truenas.yaml' volumes: - type: bind source: '/var/run/docker.sock' target: '/var/run/docker.sock' configs: - source: truenas_config target: '/tmp/traefik-truenas.yaml' network_mode: 'host' configs: truenas_config: content: | http: services: TrueNAS: loadbalancer: servers: - url: http://192.168.6.66:8080 routers: TrueNAS: entrypoints: - http service: TrueNAS rule: >- Host(`truenas.montero`) || Host(`truenas.montero.house.local`)